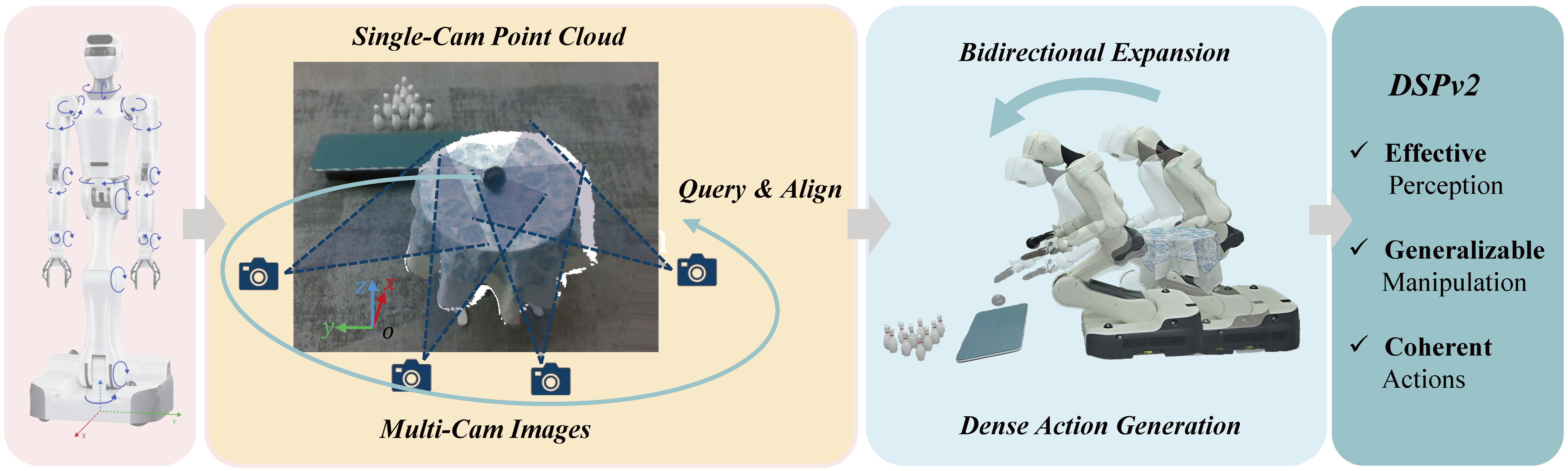

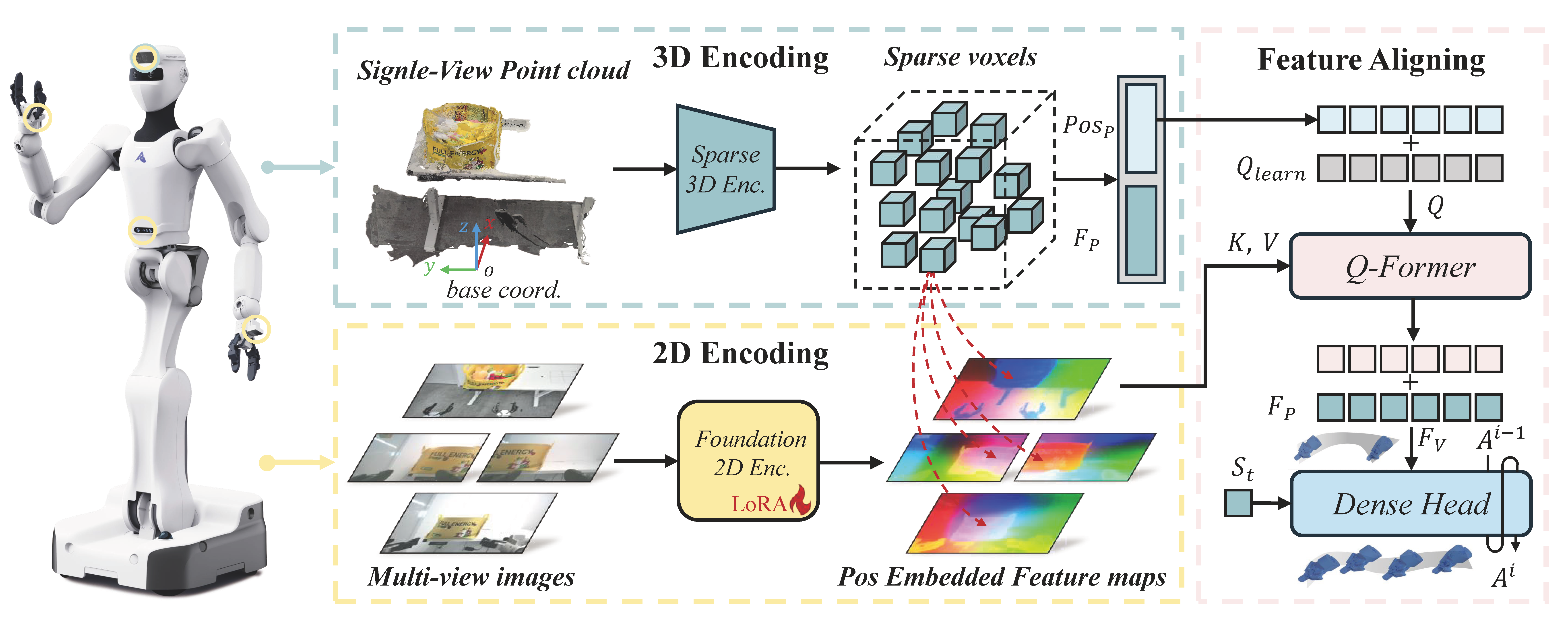

DSPv2 is a whole-body mobile manipulation policy that generalizes well by fusing multi-view 2D semantic perception with 3D spatial awareness, and that generates coherent whole-body actions with a dense action head.
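The sentence above names two ingredients, observation fusion and a dense action head. The toy, stdlib-only Python sketch below makes them concrete. It is **not** DSPv2's implementation. The fusion rule (average the per-camera 2D feature vectors, then append a 3D feature) and the coarse-to-fine head (start from one action, repeatedly double the sequence by inserting midpoints, then let a refinement step adjust it) are assumptions chosen for illustration. `fuse_observations`, `upsample`, `dense_action_head`, and the `refine` callback, which stands in for a learned network, are all hypothetical names.

```python
from typing import Callable, List, Sequence

Vector = List[float]
# Hypothetical signature for a learned refinement step: (actions, context) -> actions.
Refiner = Callable[[List[Vector], Vector], List[Vector]]


def fuse_observations(view_feats: Sequence[Vector], point_feat: Vector) -> Vector:
    """Hypothetical fusion: average the per-camera 2D features, then append the 3D feature."""
    if not view_feats:
        raise ValueError("need at least one camera view")
    dim = len(view_feats[0])
    mean_2d = [sum(v[i] for v in view_feats) / len(view_feats) for i in range(dim)]
    return mean_2d + list(point_feat)


def upsample(actions: List[Vector]) -> List[Vector]:
    """Double the temporal resolution: after each action, insert the midpoint to the
    next action (the last action is paired with itself), so length L becomes 2L."""
    out: List[Vector] = []
    for i, a in enumerate(actions):
        nxt = actions[i + 1] if i + 1 < len(actions) else a
        out.append(list(a))
        out.append([(x + y) / 2 for x, y in zip(a, nxt)])
    return out


def dense_action_head(context: Vector, refine: Refiner, init: Vector, horizon: int) -> List[Vector]:
    """Assumed coarse-to-fine scheme: start from one action and keep upsampling
    and refining until the sequence covers the requested horizon."""
    if horizon < 1:
        raise ValueError("horizon must be positive")
    actions = [list(init)]
    while len(actions) < horizon:
        actions = refine(upsample(actions), context)
    return actions[:horizon]


# Usage with a placeholder refiner that leaves the sequence unchanged.
ctx = fuse_observations([[1.0, 3.0], [3.0, 5.0]], [7.0])
plan = dense_action_head(ctx, lambda seq, c: seq, init=[1.0, -1.0], horizon=5)
print(ctx)        # [2.0, 4.0, 7.0]
print(len(plan))  # 5
```

In a real policy, `refine` would be a trained network conditioned on the fused context. Because each pass doubles the sequence length, this toy head reaches a horizon of H after about log2(H) refinement passes (three passes for H = 8).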

Pick and Place

After Lights Dimmed and Table Raised

In-domain Scene

In-domain Scene

Out-of-domain Scene

Bowling

Original Bucket Sizes

All Bucket Sizes Changed

Original Setup

Object Color Change

All videos above are played at 4x speed. DSPv2 makes full use of the available observations and produces coherent whole-body manipulation. It also generalizes across changes in lighting, spatial arrangement, object color and shape, and scene. However, it also has clear limitations.

When a large domain shift occurs, for example when the test robot differs from the training robot and the manipulation platform changes (as in the Deliver task), its generalization is very limited, which in turn degrades the stability of its whole-body actions. We believe that introducing higher-frequency modalities to help policies generalize more robustly is key to addressing this challenge in the future.

@article{dspv2,
  title={DSPv2: Improved Dense Policy for Effective and Generalizable Whole-body Mobile Manipulation},
  author={Yue Su and Chubin Zhang and Sijin Chen and Liufan Tan and Yansong Tang and Jianan Wang and Xihui Liu},
  journal={arXiv preprint arXiv:2509.16063},
  year={2025}
}